I recently finished a semester-long group project for CSCI E-10 "Introduction to Data Science" at the Harvard Extension School. Each team was asked to design, train, test, and analyze a machine learning model that has real-world utility.

A Colab notebook with source code, visualizations, and more commentary can be found here.

Problem Statement

For a particular airport, airline, month, and year, how many flights are expected to be delayed?

Summary

We built a random forest regression model to answer this question, with arr_del15 (the number of flights delayed more than 15 minutes) as the response variable.

The model uses predictors such as year, month, number of flights, various types of delays (carrier, weather, NAS, security, late aircraft), cancellations, diversions, and different delay durations. This selection treats temporal factors and operational aspects of flight schedules as the main drivers of the response variable.
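To make the setup concrete, here is a minimal sketch of fitting a random forest regressor with scikit-learn. It is not the project's notebook code: the data is synthetic, and the column names month, arr_flights, and weather_ct are assumptions standing in for the real predictors. Only arr_del15 is taken from the write-up.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 500

# Synthetic stand-in predictors (hypothetical names, not the real dataset).
month = rng.integers(1, 13, size=n)
arr_flights = rng.integers(10, 1000, size=n)
weather_ct = rng.poisson(2.0, size=n)

# Synthetic response playing the role of arr_del15 (count of delayed flights).
arr_del15 = 0.2 * arr_flights + 1.5 * weather_ct + rng.normal(0, 5, size=n)
arr_del15 = np.clip(arr_del15, 0, None)

X = np.column_stack([month, arr_flights, weather_ct])
y = arr_del15

# Hold out 20% of the rows as a test set.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

model = RandomForestRegressor(n_estimators=50, random_state=0)
model.fit(X_train, y_train)

predictions = model.predict(X_test)
r2 = model.score(X_test, y_test)  # R^2 on the held-out rows
```

After fitting, model.feature_importances_ gives one importance score per column, which is one way to see which predictors the forest relies on most.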

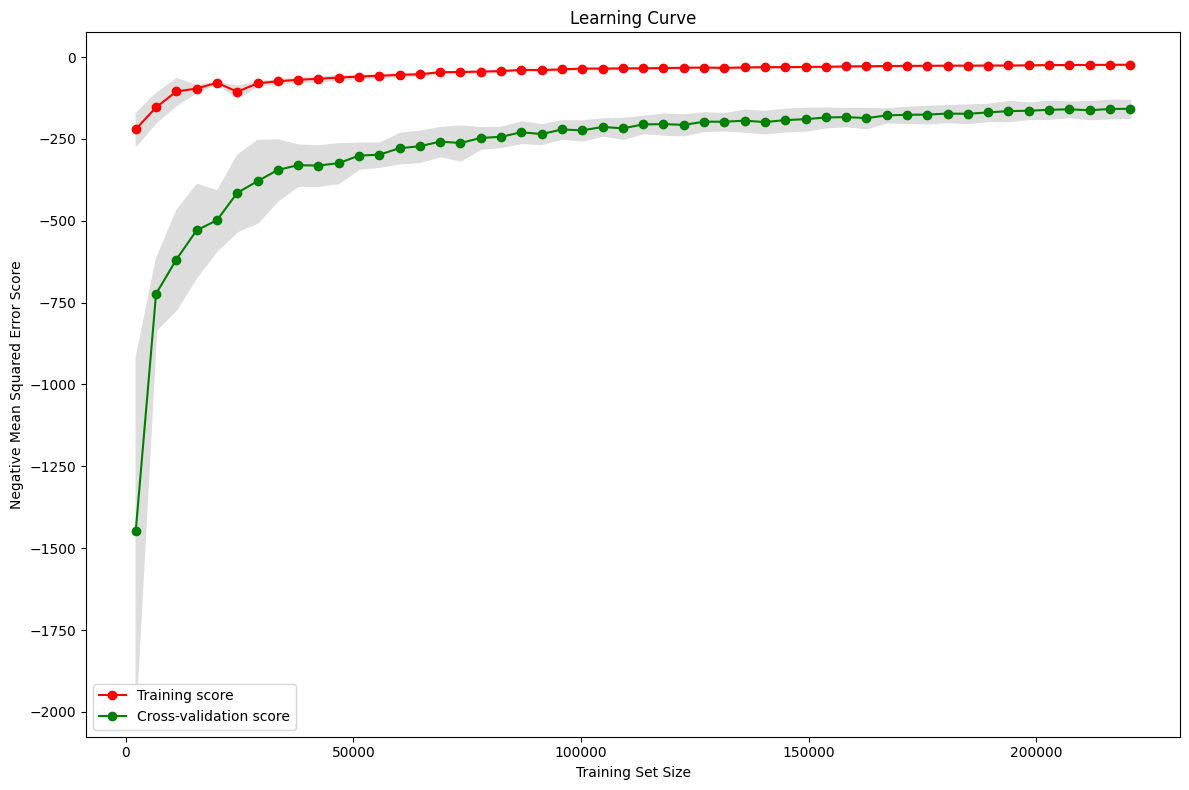

We ran a grid search to find the best hyperparameters for the Random Forest model (max_depth, min_samples_split, min_samples_leaf, and n_estimators). The selected values indicate a relatively complex model.
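A grid search over these four hyperparameters could look roughly like the sketch below. The candidate values, the scoring metric, the number of folds, and the synthetic data from make_regression are all assumptions chosen to keep the example small. They are not the grid used in the project.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

# Small synthetic regression problem standing in for the flight data.
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

# Hypothetical candidate values for the four tuned hyperparameters.
param_grid = {
    "n_estimators": [20, 50],
    "max_depth": [None, 5],
    "min_samples_split": [2, 5],
    "min_samples_leaf": [1, 2],
}

search = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid,
    scoring="neg_mean_absolute_error",  # higher (closer to 0) is better
    cv=3,
)
search.fit(X, y)

best_params = search.best_params_
best_score = search.best_score_
```

Every combination in the grid is fitted once per fold, so the cost grows multiplicatively with each added candidate value. That is where most of the tuning time goes.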

We calculated the Mean Absolute Error (MAE), Mean Squared Error (MSE), and Root Mean Squared Error (RMSE) of the Random Forest model on the test set. The values (MAE: 2.47, MSE: 127.94, RMSE: 11.31) suggest reasonable predictive accuracy, though comparing them against those of a different model would make them easier to interpret.
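For reference, the three metrics can be computed as in the sketch below, which uses only the standard library. The toy values and the mean-prediction baseline are illustrative assumptions, not project data. The last lines check that the reported RMSE is the square root of the reported MSE.

```python
import math

def regression_errors(y_true, y_pred):
    """Return (MAE, MSE, RMSE) for two equal-length sequences."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / len(errors)
    mse = sum(e * e for e in errors) / len(errors)
    return mae, mse, math.sqrt(mse)

# Toy values for illustration only.
y_true = [3, 0, 12, 7]
y_pred = [2, 1, 10, 7]
mae, mse, rmse = regression_errors(y_true, y_pred)

# A naive baseline that always predicts the mean gives a point of comparison.
mean_value = sum(y_true) / len(y_true)
baseline_mae, _, _ = regression_errors(y_true, [mean_value] * len(y_true))

# Consistency check on the figures reported above: RMSE = sqrt(MSE).
reported_mse, reported_rmse = 127.94, 11.31
consistent = round(math.sqrt(reported_mse), 2) == reported_rmse
```

Because RMSE squares each error before averaging, it can never be smaller than MAE. A large gap between the two, such as 11.31 versus 2.47, usually means a small number of large errors dominate the squared-error metrics.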

The model's residuals increase with the predicted values, indicating a potential issue with heteroscedasticity: the variability of the model's errors is not consistent across all levels of predicted delays. This could be due to the decrease in the number of observations for longer flight delays.
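One simple way to quantify this pattern, beyond reading it off a plot, is to bin the predictions and compare the spread of the residuals in each bin. The sketch below uses synthetic data in which the noise grows with the prediction by construction. The function name residual_spread_by_bin and the choice of four quantile bins are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic predictions and residuals whose noise grows with the prediction.
predicted = rng.uniform(1, 100, size=2000)
residuals = rng.normal(0, 0.1 * predicted)

def residual_spread_by_bin(predicted, residuals, n_bins=4):
    """Standard deviation of residuals within quantile bins of the predictions."""
    edges = np.quantile(predicted, np.linspace(0, 1, n_bins + 1))
    # Map each prediction to a bin index in 0..n_bins-1.
    bins = np.searchsorted(edges, predicted, side="right") - 1
    bins = np.clip(bins, 0, n_bins - 1)
    return np.array([residuals[bins == b].std() for b in range(n_bins)])

spread = residual_spread_by_bin(predicted, residuals)
```

If the errors had constant variance, the entries of spread would be roughly equal. A steady increase from the first bin to the last is the numerical counterpart of the fan shape in a residual plot.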

Overall, the Random Forest model has shown a high level of accuracy. However, the insights from the plots suggest that there is room for improvement. The model might benefit from further tuning to mitigate the heteroscedasticity observed. It's also important to consider the computational cost (indicated by the time taken for parameter tuning and model training) when deploying the model in a real-world setting.